Adelaide Driving Self-Efficacy Scale (ADSES)

Purpose

The Adelaide Driving Self-Efficacy Scale (ADSES) is a driving self-efficacy assessment. This scale has been developed to assess driving confidence on 12 typical driving tasks.

In-Depth Review

Purpose of the measure

The Adelaide Driving Self-Efficacy Scale (ADSES) is a driving self-efficacy assessment. This scale has been developed to assess driving confidence on 12 typical driving tasks such as parallel parking, driving at night and driving in unfamiliar areas.

Available versions

The ADSES was developed by Dr. Stacey George, Michael Clark and Maria Crotty at Flinders University Department of Rehabilitation Aged and Extended Care in South Australia and was published in 2007.

Features of the measure

Items:

The ADSES is composed of 12 items that measure the client's level of confidence in performing typical driving behaviours:

- Driving in your local area

- Driving in heavy traffic

- Driving in unfamiliar areas

- Driving at night

- Driving with people in the car

- Responding to road signs/traffic signals

- Driving around a roundabout

- Attempting to merge with traffic

- Turning right across oncoming traffic

- Planning travel to a new destination

- Driving in high speed areas

- Parallel parking

Scoring:

The ADSES is self-scored using a Likert scale from 0 (no confidence) to 10 (completely confident). The scores for the 12 items are then summed, for a total possible score of 120, which indicates the highest level of confidence.
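The summation rule can be sketched as follows. This is a minimal illustration only, assuming each of the 12 items is rated on the 0 to 10 scale; the function name `adses_total` is hypothetical and not part of any published ADSES scoring material, and the example ratings are invented.

```python
# Hypothetical helper: totals ADSES self-ratings, assuming each of the
# 12 items is rated from 0 (no confidence) to 10 (completely confident).
ADSES_ITEMS = 12
MAX_ITEM_RATING = 10


def adses_total(ratings: list[int]) -> int:
    """Sum 12 item ratings (0-10 each) into a total from 0 to 120."""
    if len(ratings) != ADSES_ITEMS:
        raise ValueError(f"expected {ADSES_ITEMS} ratings, got {len(ratings)}")
    for r in ratings:
        if not 0 <= r <= MAX_ITEM_RATING:
            raise ValueError(f"rating {r} is outside 0-{MAX_ITEM_RATING}")
    return sum(ratings)


# Invented ratings, in item order, with lower confidence when driving
# at night (item 4) and when parallel parking (item 12).
example = [10, 8, 7, 4, 10, 10, 9, 8, 7, 9, 8, 5]
print(adses_total(example), "/", ADSES_ITEMS * MAX_ITEM_RATING)  # 95 / 120
```

Higher totals indicate greater self-perceived driving confidence; the sketch only validates and sums the ratings and does not interpret them.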

Time:

Not reported.

Subscales:

None.

Equipment:

A pen and the test are needed to complete the ADSES.

Training:

No training requirements have been reported since the ADSES is intended to be self-administered.

Alternative forms of the Adelaide driving self-efficacy scale

ADSES–P: A proxy version has been developed. The only change from the original ADSES is the phrasing of the initial question: “How confident do you feel your family member can complete the following driving tasks safely?” instead of “How confident do you feel doing the following activities?” (Stapleton, Connolly, & O’Neill, 2012).

A study by Stapleton et al. (2012) showed a significant correlation between the ADSES and ADSES-P among patients with stroke.

Client suitability

Can be used with:

Patients with stroke

Should not be used with:

Not reported.

In what languages is the measure available?

English

Summary

| What does the tool measure? | Self-perceived driving confidence. |

| What types of clients can the tool be used for? | Patients with stroke |

| Is this a screening or assessment tool? | Assessment |

| Time to administer | Not reported. |

| Versions | ADSES, ADSES–P. |

| Other Languages | None. |

| Measurement Properties | |

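| Reliability | Internal consistency: One study reported excellent internal consistency. Test-retest: No studies have examined the test-retest reliability of the ADSES. Intra-rater: No studies have examined the intra-rater reliability of the ADSES. Inter-rater: No studies have examined the inter-rater reliability of the ADSES. |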

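| Validity | Content: One paper reported on the content validity of the ADSES and noted that items were generated from a literature review, clinical experience and expert review. Criterion: No studies have examined concurrent validity; two studies have examined predictive validity. Construct: Two studies have examined convergent validity, with the Driving Habits Questionnaire and with the ADSES-P. Known groups: Two studies have examined known-group validity. |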

| Floor/Ceiling Effects | One paper reported a ceiling effect. |

| Sensitivity/Specificity | Not reported. |

| Does the tool detect change in patients? | Not reported. |

| Acceptability | The ADSES is intended to be self-administered and a proxy version has been developed. |

| Feasibility | The ADSES is a self-report scale and does not require any formal training. |

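| How to obtain the tool? | The ADSES is available as an appendix in the following article: George, S., Clark, M., & Crotty, M. (2007). Development of the Adelaide driving self-efficacy scale. Clinical Rehabilitation, 21(1), 56-61. |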

Psychometric Properties

Overview

We conducted a literature search to identify all relevant publications examining the psychometric properties of the Adelaide Driving Self-Efficacy Scale. Only two studies have been identified (Stapleton, Connolly & O’Neill, 2012; George, Clark & Crotty, 2007). Additional research on the psychometric properties of this scale is required as most information currently available originates from the authors of the scale.

Floor/Ceiling Effects

Stapleton, Connolly & O’Neill (2012) recruited 46 patients with stroke

Reliability

Internal consistency

George, Clark and Crotty (2007) examined the internal consistency of the ADSES in a sample of 81 patients with stroke

Inter-rater:

No studies have examined the inter-rater reliability of the ADSES.

Intra-rater:

No studies have examined the intra-rater reliability of the ADSES.

Test-retest:

No studies have examined the test-retest reliability of the ADSES.

Validity

Content:

George, Clark and Crotty (2007) conducted a literature review regarding self-efficacy and older drivers, then combined this information with their own clinical experience to generate a list of driving behaviours that can be influenced by medical conditions such as stroke. This list was tested by an expert group composed of (i) mobility instructors of the Guide Dogs Association of South Australia and Northern Territory Inc.; (ii) driver-trained occupational therapists; and (iii) the project steering committee, which resulted in a final list of 12 items.

Criterion:

Concurrent:

No studies have examined the concurrent validity of the ADSES.

Predictive:

Stapleton et al. (2012) recruited 46 patients with stroke

George, Clark and Crotty (2007) examined the criterion validity of the ADSES by comparing ADSES scores with a standardized on-road assessment, in a sample of 45 participants with stroke

Construct:

Convergent/Discriminant:

McNamara, Walker, Ratcliffe & George (2015) examined the convergent validity of the ADSES and the Driving Habits Questionnaire (DHQ) in a sample of 40 patients with stroke, using a correlation coefficient. There was a significant relationship between the ADSES and three aspects of the DHQ: (i) driving space (r=0.35); (ii) number of kilometers driven per week (r=0.43); and (iii) self-limiting driving (r=0.63).

Stapleton, Connolly & O’Neill (2012) examined the convergent validity of the ADSES and ADSES-P in a sample of 46 patients with stroke. Correlations between the two versions were reported at initial assessment (r=0.707) and at 6-month follow-up (r=0.927). While there was no significant difference in ADSES scores from initial assessment to 6-month follow-up, there was a significant difference in ADSES-P scores between the two time-points (p=0.028).

Known groups:

McNamara, Ratcliffe & George (2014) examined the known-group validity of the ADSES in a sample of 40 patients with stroke

George, Clark and Crotty (2007) examined the known-group validity of the ADSES by comparing ADSES scores of participants with stroke

Responsiveness

No studies have examined the responsiveness of the ADSES.

References

- George, S., Clark, M., & Crotty, M. (2007). Development of the Adelaide driving self-efficacy scale. Clinical Rehabilitation, 21(1), 56-61.

- McNamara, A., Ratcliffe, J., & George, S. (2014). Evaluation of driving confidence in post‐stroke older drivers in South Australia. Australasian Journal on Ageing, 33(3), 205-207.

- McNamara, A., Walker, R., Ratcliffe, J., & George, S. (2015). Perceived confidence relates to driving habits post-stroke. Disability and Rehabilitation, 37(14), 1228-1233.

- Stapleton, T., Connolly, D., & O’Neill, D. (2012). Exploring the relationship between self‐awareness of driving efficacy and that of a proxy when determining fitness to drive after stroke. Australian Occupational Therapy Journal, 59(1), 63-70.

See the measure

How to obtain the Adelaide Driving Self-Efficacy Scale

The ADSES is available as an appendix in the following article:

George S, Clark M, Crotty M (2007). Development of the Adelaide driving self-efficacy scale. Clin Rehabil. Jan;21(1):56-61.

Bells Test

Purpose

The Bells Test is a cancellation test that allows for a quantitative and qualitative assessment of visual neglect in the near extrapersonal space.

In-Depth Review

Purpose of the measure

The Bells Test is a cancellation test that allows for a quantitative and qualitative assessment of visual neglect in the near extrapersonal space.

Available versions

The Bells Test was developed by Gauthier, Dehaut, and Joanette in 1989.

Features of the measure

Items:

There are no actual items to the Bells Test.

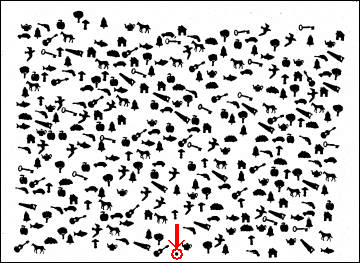

In the Bells Test, the patient is asked to circle with a pencil all 35 bells embedded within 280 distractors (houses, horses, etc.) on an 11 × 8.5-inch page (Figure 1). All drawings are black. The page is placed at the patient’s midline.

Figure 1. Bells Test

The objects are presented in an apparently random order, but are actually equally distributed in 7 columns, each containing 5 targets and 40 distractors. A black dot at the bottom of the page indicates where the page should be placed in relation to the patient’s midsagittal plane. Of the 7 columns, 3 are on the left side of the sheet, 1 is in the middle, and 3 are on the right. Therefore, if the patient omits bells only in the leftmost column, the neglect can be estimated to be mild, whereas omissions extending into the more central columns suggest a greater neglect of the left side of space.
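The column layout lends itself to a quick arithmetic check and a rough severity estimate. The sketch below is a hypothetical helper (`left_neglect_extent` is not part of the published test): it assumes the examiner has tallied omitted bells per column, indexed 0 (far left) to 6 (far right), and reports how far left-sided omissions reach toward the middle. It encodes only the qualitative reasoning in the text, not a validated grading scale; a right-sided version would mirror it.

```python
# Layout described for the Bells Test: 7 columns, each holding 5 bells
# (targets) and 40 distractors; 3 columns on the left, 1 in the middle,
# 3 on the right. Columns are indexed 0 (far left) to 6 (far right).
COLUMNS = 7
BELLS_PER_COLUMN = 5
DISTRACTORS_PER_COLUMN = 40
LEFT_COLUMNS = (0, 1, 2)  # outermost left column first

assert COLUMNS * BELLS_PER_COLUMN == 35        # total targets
assert COLUMNS * DISTRACTORS_PER_COLUMN == 280  # total distractors


def left_neglect_extent(omitted_per_column: list[int]) -> int:
    """Return how far omissions reach inward on the left side.

    0 -> no left-side omissions
    1 -> omissions confined to the outermost left column (suggests mild neglect)
    2 or 3 -> omissions reach more central columns (suggests greater neglect)
    """
    if len(omitted_per_column) != COLUMNS:
        raise ValueError("expected one omission count per column (7 values)")
    if any(not 0 <= n <= BELLS_PER_COLUMN for n in omitted_per_column):
        raise ValueError("each column contains only 5 bells")
    extent = 0
    for depth, col in enumerate(LEFT_COLUMNS, start=1):
        if omitted_per_column[col] > 0:
            extent = depth  # most central affected left column so far
    return extent


print(left_neglect_extent([3, 0, 0, 0, 0, 0, 0]))  # 1
print(left_neglect_extent([5, 4, 1, 0, 0, 0, 1]))  # 3
```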

The examiner is seated facing the patient. First a demonstration sheet is presented to the patient. This sheet contains an oversized version of each of the distractors and one circled bell. The patient is asked to name the images indicated by the examiner in order to ensure proper object recognition. If the patient experiences language difficulties or if the examiner suspects comprehension problems, the patient can instead place a card representing that object over the actual image.

The examiner gives the following instructions: “Your task will consist of circling with the pencil all the bells that you will find on the sheet that I will place in front of you without losing time. You will start when I say ‘go’ and stop when you feel you have circled all the bells. I will also ask you to avoid moving or bending your trunk if possible.” If a comprehension problem is present, the examiner has to demonstrate the task.

The test is then placed in front of the patient with the black dot (see arrow on Figure 1) on the patient’s side, centered on their midsagittal plane (the plane that divides the body into right and left halves). The test sheet is given after the instructions.

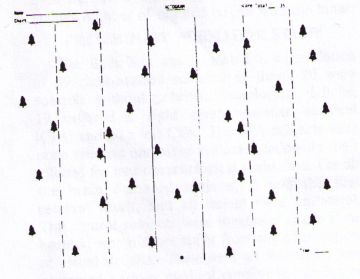

The examiner holds the scoring sheet (Figure 2) away from the patient’s view, making sure the middle dot is towards the patient. This upside-down position will make scoring easier for the examiner. After the patient begins the test, the examiner records the order of the bells circled by the patient by numbering the order on his/her scoring sheet (e.g. 1, 2, 3,…). If the patient circles another image (an image that is not a bell), the examiner indicates on his/her scoring sheet the appropriate number and the approximate location. The subsequent bell receives the next number.

Figure 2. Examiner scoring sheet.

If the patient stops before all the bells are circled, the examiner gives only one warning by saying “Are you sure all the bells are now circled? Verify again.” After that, the order of numbering continues, but the numbers are circled or underlined. The task is completed when the patient stops his/her activity.

Scoring:

The total number of circled bells is recorded, as well as the time taken to complete the test. The maximum score is 35. An omission of 6 or more bells on the right or left half of the page indicates unilateral spatial neglect (USN). From the spatial distribution of the omitted targets, the evaluator can then determine the severity of the visual neglect and the hemispace affected (i.e. left or right).

The sequence by which the patient proceeds during the scanning task can be determined by connecting the bells of the scoring sheet according to the order of the numbering.
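The scoring steps (total out of 35, omissions per half, the 6-omission cut-off for unilateral spatial neglect, and the search sequence) can be summarized in a short sketch. It is a hypothetical model, not an official scoring program: bells are identified by invented (column, row) coordinates, the middle column is assumed to count toward neither half because the text does not say how it is split, and non-bell items circled in error are not modelled.

```python
# Hypothetical data model: each bell is identified by (column, row), with
# column 0-6 counted from the patient's left and row 0-4 within a column.
COLUMNS, ROWS = 7, 5
TOTAL_BELLS = COLUMNS * ROWS      # 35
OMISSION_CUTOFF = 6               # 6 or more omissions on one half suggests USN
LEFT_HALF = {0, 1, 2}             # assumption: middle column 3 is in neither half
RIGHT_HALF = {4, 5, 6}


def score_bells(circled_in_order: list[tuple[int, int]], seconds: float) -> dict:
    """Summarize one Bells Test administration from the examiner's numbering."""
    for col, row in circled_in_order:
        if not (0 <= col < COLUMNS and 0 <= row < ROWS):
            raise ValueError(f"no bell at column {col}, row {row}")
    circled = set(circled_in_order)  # a bell circled twice counts once
    all_bells = {(c, r) for c in range(COLUMNS) for r in range(ROWS)}
    omitted = all_bells - circled
    left = sum(1 for col, _ in omitted if col in LEFT_HALF)
    right = sum(1 for col, _ in omitted if col in RIGHT_HALF)
    return {
        "score": len(circled),                  # out of 35
        "time_s": seconds,
        "left_omissions": left,
        "right_omissions": right,
        "possible_usn": max(left, right) >= OMISSION_CUTOFF,
        "search_path": list(circled_in_order),  # order of the examiner's numbering
    }


# Example: the patient works from right to left and stops early on the left.
path = [(c, r) for c in range(6, 1, -1) for r in range(ROWS)] + [(1, 0), (1, 1)]
result = score_bells(path, seconds=142.0)
print(result["score"], result["left_omissions"], result["possible_usn"])  # 27 8 True
```

Keeping `search_path` in numbering order mirrors connecting the bells on the scoring sheet, so the scanning strategy can be reviewed after the session.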

Time:

Less than 5 minutes.

Training:

None typically reported.

Subscales:

None.

Equipment:

- The test paper (8.5″x11″ page with 35 bells embedded within 280 distractors).

- Pencil

- Score sheet

- Stopwatch

Alternative forms of the Bells Test

None.

Client suitability

Can be used with:

Patients with stroke

- Patients must be able to hold a pencil to complete the test (the presence of apraxia may impair this ability).

- Patients must be able to visually discriminate between distractor items, such as the images of houses and horses, and the bells that are to be cancelled.

Should not be used with:

- As with other cancellation tests, the Bells Test cannot be used to differentiate between sensory neglect and motor neglect because it requires both visual search and manual exploration (Làdavas, 1994).

- The Bells Test cannot be completed by proxy.

In what languages is the measure available?

Not applicable.

Summary

| What does the tool measure? | Unilateral Spatial Neglect (USN) in the near extrapersonal space. |

| What types of clients can the tool be used for? | Patients with stroke |

| Is this a screening or assessment tool? | Screening |

| Time to administer | Less than 5 minutes. |

| Versions | None. |

| Other Languages | Not applicable. |

| Measurement Properties | |

| Reliability | Internal consistency: No studies have examined the internal consistency of the Bells Test. Test-retest: |

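| Validity | Criterion: One study reported that the Bells Test is more likely to identify the presence of neglect than the Albert’s Test in patients with stroke. Construct: One study reported a significant difference in mean scores between patients with right and left cerebral lesions. |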

| Does the tool detect change in patients? | Not applicable. |

| Acceptability | The Bells Test should be used as a screening tool rather than for clinical diagnosis of USN. Apraxia must be ruled out as this may affect the validity of test results. This test cannot be completed by proxy. Patients must be able to hold a pencil and visually discriminate between distractor items to complete. The measure cannot be used to differentiate between sensory neglect and motor neglect. |

| Feasibility | The Bells Test requires no specialized training to administer and only minimal equipment is required (a pencil, a stopwatch, the test paper and scoring sheet). The test is simple to score and interpret. The test is placed at the patient’s midline and a demonstration sheet is used to familiarize the patient with the images used in the test. The examiner is required to follow along with the patient as they circle each bell, and record on the scoring sheet the order in which the bells are cancelled. Upon completion of the test, the examiner must count the number of bells cancelled out of a total of 35, and record the time taken by the patient. An omission of 6 or more bells on the right or left half of the page indicates USN. |

| How to obtain the tool? | You can download a copy: Bells Test |

Psychometric Properties

Overview

A review of the Bells Test reported that the measure has excellent test-retest reliability

than Line Bisection Test (Marsh & Kersel, 1993; Azouvi et al., 2002).

For the purposes of this review, we conducted a literature search to identify all relevant publications on the psychometric properties of the Bells Test.

Reliability

Internal consistency

No studies have examined the internal consistency of the Bells Test.

Test-retest:

No studies have examined the test-retest reliability of the Bells Test.

Validity

Criterion:

Vanier et al. (1990) administered the Bells Test and the Albert’s Test to 40 neurologically healthy adults, and 47 patients with right brain stroke

Ferber and Karnath (2001) examined the ability of various cancellation and line bisection tests to detect the presence of neglect in 35 patients with spatial neglect. The Bells Test detected a significantly higher percentage of omitted targets than the other tests (Line Bisection Test, and 3 cancellation tests: Letter Cancellation Test, Star Cancellation Test and Line Crossing Test). The Line Bisection Test missed 40% of patients with spatial neglect. The Letter Cancellation Test and the Bells Test missed only 6% of the cases.

Construct:

Known groups:

Gauthier et al. (1989) examined the Bells Test in 59 subjects, of which 20 were controls, 19 had right cerebral lesions and 20 had left cerebral lesions. A statistically significant difference in mean scores between the group with right cerebral lesions and the group with left cerebral lesions was observed.

Responsiveness

No studies have examined the responsiveness of the Bells Test.

References

- Azouvi, P., Samuel, C., Louis-Dreyfus, A., et al. (2002). Sensitivity of clinical and behavioural tests of spatial neglect after right hemisphere stroke. Journal of Neurology, Neurosurgery and Psychiatry, 73, 160-166.

- Ferber, S., & Karnath, H. O. (2001). How to assess spatial neglect: Line bisection or cancellation tests? Journal of Clinical and Experimental Neuropsychology, 23, 599-607.

- Gauthier, L., Dehaut, F., & Joanette, Y. (1989). The Bells Test: A quantitative and qualitative test for visual neglect. International Journal of Clinical Neuropsychology, 11, 49-54.

- Làdavas, E. (1994). The role of visual attention in neglect: A dissociation between perceptual and directional motor neglect. Neuropsychological Rehabilitation, 4, 155-159.

- Marsh, N. V., & Kersel, D. A. (1993). Screening tests for visual neglect following stroke. Neuropsychological Rehabilitation, 3, 245-257.

- Menon, A., & Korner-Bitensky, N. (2004). Evaluating unilateral spatial neglect post stroke: Working your way through the maze of assessment choices. Topics in Stroke Rehabilitation, 11(3), 41-66.

- Vanier, M., et al. (1990). Evaluation of left visuospatial neglect: Norms and discrimination power of two tests. Neuropsychology, 4, 87-96.

See the measure

How to obtain the Bells Test

You can download

Motor-Free Visual Perception Test (MVPT)

Purpose

The Motor-Free Visual Perception Test (MVPT) is a widely used, standardized test of visual perception. Unlike other typical visual perception measures, this measure is meant to assess visual perception independent of motor ability. It was originally developed for use with children (Colarusso & Hammill, 1972); however, it has been used extensively with adults. The most recent version of the measure, the MVPT-3, can be administered to children (> 3 years), adolescents, and adults (< 95 years) (Colarusso & Hammill, 2003).

In-Depth Review

Purpose of the measure

The Motor-Free Visual Perception Test (MVPT) is a widely used, standardized test of visual perception. Unlike other typical visual perception measures, this measure is meant to assess visual perception independent of motor ability. It was originally developed for use with children (Colarusso & Hammill, 1972); however, it has been used extensively with adults. The most recent version of the measure, the MVPT-3, can be administered to children (> 3 years), adolescents, and adults (< 95 years) (Colarusso & Hammill, 2003).

The MVPT can be used to determine differences in visual perception across several different diagnostic groups, and is often used by occupational therapists to screen those with stroke

Available versions

Original MVPT

The original MVPT was published by Colarusso and Hammill in 1972.

MVPT – Revised Version (MVPT-R)

The MVPT-R was published by Colarusso and Hammill in 1996. In this version, four new items were added to the original MVPT (40 items in total), and the U.S. age norms were extended to include children up to the age of 12. No adult data were collected when the scale was developed; however, the MVPT-R has been used with both pediatric and adult populations (Brown, Rodger, & Davis, 2003). While the MVPT-R has been reported to have an excellent correlation with the original MVPT (r = 0.85; Colarusso & Hammill, 1996), Brown et al. (2003) caution that no other reliability and validity data have been reported for this version.

MVPT – 3rd Edition (MVPT-3)

The MVPT-3 was published by Colarusso and Hammill in 2003. The MVPT-3 was a major revision of the MVPT-R, and includes additional test items that allow for the assessment of visual perception in adults and adolescents. The MVPT-3 is intended for individuals between the ages of 4-95, and takes approximately 25 minutes to administer. (http://www4.parinc.com/Products/Product.aspx?ProductId=MVPT-3)

Features of the measure

Items:

The items of the original MVPT, MVPT-R and MVPT-3 represent 5 visual domains:

Source: Colarusso & Hammill, 1996

| Visual Discrimination | The ability to discriminate dominant features in different objects; for example, the ability to discriminate position, shapes, forms, colors and letter-like positions. |

| Visual Figure-Ground | The ability to distinguish an object from its background. |

| Visual Memory | The ability to recall dominant features of one stimulus item or to remember the sequence of several items. |

| Visual Closure | The ability to identify incomplete figures when only fragments are presented. |

| Visual Spatial | The ability to orient one’s body in space and to perceive the positions of objects in relation to oneself and to objects. |

Note: The five domains do not represent different subscales or subtests and therefore cannot be used to yield individual scores.

Original MVPT

Contains 36 items.

MVPT-R

Contains 40 items. Since the MVPT-R includes children up to 12 years old, four items were added to the items of the original MVPT to accommodate the increased age-range covered by the norms of the MVPT-R.

MVPT-3

Contains 65 items. Before administering the MVPT-3, the examiner must ask for the patient’s date of birth and compute their age. This will determine where in the test one should begin. Children between the ages of 4-10 begin with the first example item and complete items 1-40. Individuals between the ages of 11-95 begin with the third example item and complete items 14-65. All of the items that fall within an individual’s age group must be administered.
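The age-based entry rule can be written as a small lookup. This sketch assumes age is computed in completed years from the date of birth on the test date; the function names are hypothetical, and the item ranges are the ones stated above (items 1-40 for ages 4-10, items 14-65 for ages 11-95).

```python
from datetime import date


def age_in_years(birth: date, test_day: date) -> int:
    """Completed years of age on the test date."""
    years = test_day.year - birth.year
    if (test_day.month, test_day.day) < (birth.month, birth.day):
        years -= 1  # birthday not yet reached in the test year
    return years


def mvpt3_start(age: int) -> tuple[str, range]:
    """Return the starting example item and the items to administer."""
    if 4 <= age <= 10:
        return "first example item", range(1, 41)    # items 1-40
    if 11 <= age <= 95:
        return "third example item", range(14, 66)   # items 14-65
    raise ValueError("the MVPT-3 is intended for ages 4-95")


age = age_in_years(date(1950, 6, 15), date(2024, 3, 1))
example, items = mvpt3_start(age)
print(age, example, items[0], items[-1], len(items))  # 73 third example item 14 65 52
```

Returning a `range` keeps both endpoints explicit, which makes it easy to confirm that every item within the individual's age group is administered.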

Each item consists of a black-and-white line drawing stimulus, along with four multiple-choice response options (A, B, C, D) from which to choose the item that matches the example. For most items, the stimulus and response choices appear on the same page. The stimulus drawing appears at the top of the page above a row of four multiple-choice options (see image below).

Below are four examples of test items and their corresponding multiple-choice response options:

Items assessing visual memory have the stimulus and multiple-choice options presented on separate pages. For these items, the stimulus page is presented for 5 seconds, removed, and the options page is then presented. Items with similar instructions are grouped together in order of increasing difficulty. The patient points to or says the letter that corresponds to the desired answer (Su et al., 2000). The examiner records each response on the recording form.

To ensure that the patient understands the task instructions, example items are presented for each new set of instructions. Examiners must ensure that the patient understands these directions before proceeding to the next domain.

Subscales:

None.

Equipment:

Original MVPT and MVPT-R

Materials for the test include the manual that describes the administration and scoring procedures, the test plate book, score sheet, stopwatch and a pencil (Brown et al., 2003).

MVPT-3

Materials for the test include the manual that describes administration and scoring procedures, a recording form to record patient responses, and a spiral-bound test plates easel.

Training:

Various health professionals, including occupational therapists, teachers, school psychologists, and optometrists, can administer all versions of the MVPT. Only individuals familiar with both the psychometric properties and the score limitations of the test should conduct interpretations (Colarusso & Hammill, 2003).

Time:

Original MVPT and MVPT-R

The test takes 10-15 minutes to administer, and 5 minutes to score (Brown et al., 2003).

MVPT-3

According to the manual, the MVPT-3 takes approximately 20 to 30 minutes to administer and approximately 10 minutes to score.

Scoring:

Original MVPT and MVPT-R

One point is given for each correct response. Raw scores are then converted to age and perceptual equivalents to allow for a comparison of the patient’s performance to that of a normative group of same-aged peers.

MVPT-3

A single raw score is formed, representing the patient’s overall visual perceptual ability. The raw score is calculated by subtracting the number of errors made from the number of the last item attempted. The raw score can then be converted to standard scores, age equivalents, and percentile ranks using the norm tables provided in the manual, allowing the patient’s performance to be compared with that of a normative group of same-aged peers. Standard scores range from 55 to 145, and higher scores reflect fewer deficits in general visual perceptual function.
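The raw-score arithmetic can be illustrated as below. The function name is hypothetical, and converting the raw score to standard scores, age equivalents, or percentile ranks still requires the norm tables in the manual, which are not reproduced here.

```python
def mvpt3_raw_score(last_item_attempted: int, errors: int) -> int:
    """Raw score = number of the last item attempted minus errors made."""
    if last_item_attempted < 1:
        raise ValueError("the last item attempted must be a positive item number")
    if not 0 <= errors <= last_item_attempted:
        raise ValueError("errors must be between 0 and the last item number")
    return last_item_attempted - errors


# An adult who works through to item 65 and makes 9 errors.
print(mvpt3_raw_score(65, 9))  # 56
```

As a consequence of the formula as described, an examinee aged 11 to 95 who starts at item 14 is implicitly credited for items 1 to 13, since the score is based on the number of the last item attempted rather than on the count of items administered.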

Alternative form of the MVPT

- MVPT – Vertical Version (MVPT-V) (Mercier, Hebert, Colarusso, & Hammill, 1996).

Response sets are presented in a vertical layout rather than the horizontal layout found in other versions of the MVPT. This layout allows for an accurate assessment of visual perceptual abilities in adults who have hemifield visual neglect, commonly found in patients with stroke or traumatic brain injury. These patients are unable to attend to a portion of the visual field, and may therefore miss answer choices presented in that part of the visual field when the choices are laid out horizontally. The MVPT-V contains 36 items. Mercier, Hebert, and Gauthier (1995) reported excellent test-retest reliability for the MVPT-V (ICC = 0.92).

Note: The MVPT-V removes unilateral visual neglect as a variable in test performance and therefore should not be used to assess driving ability (Mazer, Korner-Bitensky, & Sofer, 1998).

Client suitability

Can be used with:

- Patients with stroke

- The MVPT can be used in patients with expressive aphasia if they are able to understand the instructions and the requirements of the different item sets.

Should not be used in:

- Children under the age of 4.

- The MVPT calculates a global score and thus provides less information regarding specific visual dysfunction than a scale that provides domain-specific scores (Su et al., 2000). To assess various domains of visual perceptual ability, an alternative with good psychometric properties is the Rivermead Perceptual Assessment Battery. It has 16 different subtests assessing various aspects of visual perception, takes 45 to 50 minutes to administer, and has established reliability (Bhavnani, Cockburn, Whiting & Lincoln, 1983) and validity (Whiting, Lincoln, Bhavnani & Cockburn, 1985). It was designed to assess visual perception problems in patients with stroke (Whiting et al., 1985).

- The MVPT is administered via direct observation of task completion and cannot be used with a proxy respondent.

- The MVPT-3 should be used only as a screening instrument with 4-year-old children but can be used for diagnostic purposes in all other age groups (Colarusso & Hammill, 2003).

- McCane (2006) argues that although Colarusso and Hammill (2003) state that the MVPT-3 can be used as a diagnostic tool in all age groups other than 4-year-olds, even more cautious interpretation is needed. This is based on the generally accepted notion that the reliability of a tool should exceed 0.90 for it to be used for diagnostic and decision-making purposes (Sattler, 2001). Therefore, the MVPT-3 should only be used as a diagnostic tool in adolescents aged 14 to 18, because this is the only age group in which reliability exceeds 0.90.

- The MVPT-V removes unilateral visual neglect as a variable in test performance and therefore should not be used to assess driving ability (Mazer et al., 1998).

In what languages is the measure available?

No information is currently available regarding the languages in which the instructions to the MVPT have been translated.

Note: Because the test requires no verbal response, it can be used with minimal language use if the clinician can determine, through the practice items, that the individual understands the task requirements.

Summary

| What does the tool measure? | Visual perception independent of motor ability. |

| What types of clients can the tool be used for? | The MVPT can be used to determine differences in visual perception across several different diagnostic groups, and is often used by occupational therapists to screen those with stroke. The MVPT was originally developed for use with children; however, it has been used extensively with adults. |

| Is this a screening or assessment tool? | Assessment. |

| Time to administer | The MVPT and MVPT-R take 10-15 minutes to administer and 5 minutes to score. The MVPT-3 takes approximately 20 to 30 minutes to administer and approximately 10 minutes to score. |

| Versions | Original MVPT; MVPT Revised version (MVPT-R); MVPT 3rd edition (MVPT-3); MVPT Vertical Version (MVPT-V). |

| Other Languages | No information is currently available regarding the languages into which the instructions to the MVPT have been translated. Note: Because the test requires no verbal response, it can be used with minimal language use if the clinician can determine, through the practice items, that the individual understands the task requirements. |

| Measurement Properties | |

| Reliability | Internal consistency: One study examined the internal consistency Test-retest: |

| Validity | Content: One study examined the content validity of the original MVPT. Criterion: Predictive: Construct: Known Groups: |

| Does the tool detect change in patients? | No studies have examined the responsiveness of the MVPT. |

| Acceptability | The MVPT is a short and simple measure and has been reported as well tolerated by patients. The test is administered by direct observation and is not suitable for proxy use. |

| Feasibility | The MVPT has standardized instructions for administration in an adult population and requires the test plates and manual. Only individuals familiar with both the psychometric properties and the score limitations of the test should conduct interpretations. |

| How to obtain the tool? | The MVPT can be purchased from: https://www.therapro.com/ |

Psychometric Properties

Overview

The reliability and validity of the MVPT have not been well studied. To our knowledge, the creators of the MVPT have personally gathered most of the psychometric data currently published on the scale. In addition, most existing psychometric studies have used the original MVPT only, and few studies have examined the validity of the MVPT-R and MVPT-3. Further investigation of the reliability and validity of the original MVPT, MVPT-R and MVPT-3 is therefore recommended.

Reliability

Original MVPT

Internal consistency

Colarusso and Hammill (1996) calculated the internal consistency.

Test-retest:

Colarusso and Hammill (1972) examined the test-retest reliability of the original MVPT, with coefficients ranging from r = 0.77 to r = 0.83 at different age levels and a mean coefficient of r = 0.81 for the total sample.

Inter-rater:

Has not been investigated.

MVPT-R

Internal consistency

Has not been investigated.

Test-retest:

Only one study evaluating the reliability of the MVPT-R has been reported in the literature. Burtner, Qualls, Ortega, Morris, and Scott (2002) administered the MVPT-R to a group of 38 children with learning disabilities and 37 children with age-appropriate development (aged 7 to 10 years) on two separate occasions within 2.5 weeks. Intraclass correlation coefficients (ICCs) for perceptual quotient scores ranged from adequate to excellent (ICC = 0.63 to 0.79), as did those for perceptual age scores (ICC = 0.69 to 0.86). Pearson product-moment correlations for perceptual quotient scores ranged from adequate to excellent (r = 0.70 to 0.80), and those for perceptual age scores were excellent (r = 0.77 to 0.87). These results suggest that the MVPT-R has adequate test-retest reliability, with more stability in visual perceptual scores for children with learning disabilities.
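A test-retest Pearson coefficient pairs each child's score on the first occasion with the same child's score on the second. The sketch below writes out Pearson's r from its definition; the function name and the five pairs of scores are hypothetical, not data from Burtner et al. (2002). It also shows why an absolute-agreement ICC can be lower than r: adding a constant to every retest score leaves r unchanged, whereas such an ICC would penalize the shift.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between paired scores (hypothetical helper)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    # Sum of cross-products of deviations from each occasion's mean.
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical scores for five children tested on two occasions.
session_1 = [90, 100, 105, 110, 95]
session_2 = [92, 98, 108, 112, 93]
r = pearson_r(session_1, session_2)
print(round(r, 2))

# A uniform 10-point practice effect on retest does not change r.
r_shifted = pearson_r(session_1, [s + 10 for s in session_2])
print(round(r_shifted, 2))
```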

Inter-rater:

Has not been investigated.

MVPT-3

Internal consistency

Colarusso and Hammill (2003) computed Cronbach’s coefficient alpha for each age group. Alpha coefficients ranged from poor to excellent (alpha = 0.69 to 0.90). In children aged 4, 5, and 7, alpha coefficients were 0.69, 0.76, and 0.73, respectively. Reliability coefficients for all other age groups were excellent (alphas exceeded 0.80).
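Cronbach’s alpha compares the sum of the individual item variances with the variance of the total score: alpha = k/(k - 1) × (1 - Σ item variances / total-score variance), where k is the number of items. The sketch below computes it from a respondents-by-items matrix; the helper name and the toy 0/1 item data are hypothetical and are not MVPT-3 data. Population variances are used throughout; sample variances give the same alpha as long as they are used for both the items and the total.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (hypothetical helper)."""
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across respondents.
    item_variances = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of each respondent's total score.
    total_variance = pvariance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data: 4 respondents answering 3 items scored correct (1) / incorrect (0).
toy = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 0],
]
print(round(cronbach_alpha(toy), 2))  # 0.75
```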

Test-retest:

Colarusso and Hammill (2003) examined the test-retest reliability (0.87 and 0.92, respectively), suggesting that the MVPT-3 is relatively stable over time.

Inter-rater:

Has not been investigated.

Validity

Content:

Only the content validity of the original MVPT has been provided.

The content of the MVPT was based on item analyses as well as the five visual perceptual categories proposed by Chalfant and Scheffelin (1969). The authors examined item bias, including the effects of gender, residence, and ethnicity. Performance on each item was compared for differing groups to determine any biased content. Only three items appeared to function differently based on group membership. The authors examined these items and chose not to eliminate the items based on other psychometric data.

Criterion:

Concurrent:

Original MVPT

The following information is from a review article by Brown, Rodger, and Davis (2003):

- Correlations between the MVPT and the Frostig Developmental Test of Visual Perception ranged from adequate to excellent, from r = 0.38 to r = 0.60 (Frostig, Lefever, & Whittlesey, 1966).

- Correlations between the MVPT and the Developmental Test of Visual Perception ranged from poor to excellent, r = 0.27 to r = 0.74 (Hammill, Pearson, & Voress, 1993).

- The correlation between the MVPT and the Matching subscale of the Metropolitan Readiness Tests was adequate, r = 0.40 (Hildreth, Griffiths, & McGauvran, 1965).

- Correlations between the MVPT and the Word Study Skills and Arithmetic subscales of the Stanford Achievement Tests (Primary) were adequate, from r = 0.37 to r = 0.42 (Kelly, Madden, Gardner, & Rudman, 1964).

- Correlations between the MVPT and the Durrell Analysis of Reading Difficulties were adequate, ranging from r = 0.33 to r = 0.46 (Durrell, 1955).

- The correlation between the MVPT and the Slosson Intelligence Test was adequate, r = 0.31 (Slosson, 1963).

- The correlation between the MVPT and the Pintner-Cunningham Primary Intelligence Test was adequate, r = 0.32 (Pintner & Cunningham, 1965).

Colarusso and Hammill (1996) concluded that the MVPT measures the construct of visual perception adequately because the MVPT correlated more highly with measures of visual perception (median r = 0.49) than it did with tests of intelligence (median r = 0.31) or school performance (median r = 0.38).

Predictive:

Original MVPT

Mazer et al. (1998) examined whether the MVPT could predict on-road driving test outcome in 84 patients with stroke.

Korner-Bitensky et al. (2000) also examined whether the MVPT could predict on-road driving test outcome in 269 patients with stroke.

Ball et al. (2006) examined whether scores on the visual closure items of the MVPT were predictive of future at-fault motor vehicle collisions in a cohort of older drivers (over the age of 55). The MVPT was found to be predictive: individuals who made four or more errors on the MVPT were 2.10 times more likely to crash than those who made three or fewer errors.
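A figure such as "2.10 times more likely to crash" is a ratio of crash risks between two groups. The sketch below shows the simplest version of that arithmetic, a risk ratio from a 2 × 2 table. The counts are hypothetical, chosen only so that the ratio works out to 2.10; Ball et al. (2006) may have estimated their figure with a different model (for example, one adjusting for other variables), so this illustrates the idea, not their analysis.

```python
def risk_ratio(crash_exposed: int, total_exposed: int,
               crash_unexposed: int, total_unexposed: int) -> float:
    """Crash risk in the 'exposed' group divided by crash risk in the comparison group."""
    risk_exposed = crash_exposed / total_exposed
    risk_unexposed = crash_unexposed / total_unexposed
    return risk_exposed / risk_unexposed


# Hypothetical cohort: drivers with >= 4 visual-closure errors ("exposed")
# versus drivers with <= 3 errors.
rr = risk_ratio(crash_exposed=21, total_exposed=200,
                crash_unexposed=50, total_unexposed=1000)
print(round(rr, 2))  # 2.1
```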

Construct:

Convergent/Discriminant:

Original MVPT

Su et al. (2000) found excellent correlations between the MVPT and the Loewenstein Occupational Therapy Cognitive Assessment subscales of Visuo-motor organization and Thinking operations (r = 0.70 and 0.72, respectively). Adequate correlations were found between the MVPT and the Rivermead Perceptual Assessment Battery subscales of Sequencing (r = 0.39) and Figure-ground discrimination (r = 0.41). An excellent correlation was observed between the MVPT and the Spatial awareness subscale of the Rivermead Perceptual Assessment Battery (r = 0.72).

Cate and Richards (2000) investigated the relationship between basic visual functions (acuity, visual field deficits, oculomotor skills and visual attention/scanning) and higher-level visual-perceptual processing skills (visual closure and figure-ground discrimination) in patients with stroke, using correlation analysis. An excellent correlation of r = 0.75 was observed between vision screening scores and MVPT scores.

Known groups:

Original MVPT

Su et al. (2000) compared the perceptual performance of 22 patients with intracerebral hemorrhage to 22 patients with ischemia early after stroke. The MVPT was not found to be discriminatively sensitive to side of lesion (left or right) or type of lesion (intracerebral hemorrhage vs. ischemic).

York and Cermak (1995) examined the performance of 45 individuals with a right cerebrovascular accident, a left cerebrovascular accident, or no cerebrovascular accident using the MVPT. Patients with right hemisphere lesions performed more poorly on the MVPT than patients with left hemisphere lesions and the non-stroke group. However, the degree of difference between the mean scores of each group, as calculated using effect size (ES = 0.67 and 0.54, respectively), suggesting that the MVPT can discriminate between patients with stroke
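An effect size of this kind expresses the difference between two group means in standard-deviation units. The formula York and Cermak (1995) used is not given here; the sketch assumes Cohen's d with a pooled standard deviation, and the group means, SDs and sizes are hypothetical.

```python
from math import sqrt


def cohens_d(mean_1: float, sd_1: float, n_1: int,
             mean_2: float, sd_2: float, n_2: int) -> float:
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    pooled_var = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / sqrt(pooled_var)


# Hypothetical MVPT raw scores: a comparison group vs. a right-hemisphere stroke group.
d = cohens_d(mean_1=30.0, sd_1=5.0, n_1=15, mean_2=26.0, sd_2=5.0, n_2=15)
print(round(d, 2))  # 0.8
```

By Cohen's conventional benchmarks, d values of about 0.2, 0.5 and 0.8 are read as small, medium and large effects.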

MVPT-3

Colarusso and Hammill (2003) examined MVPT-3 performance in individuals who were developmentally delayed, had experienced a head injury, or had a learning disability, and compared each group's performance with the general population mean MVPT-3 score of 100. It was hypothesized that each of these groups would score lower on the MVPT-3 than the general population. Individuals classified as developmentally delayed had a mean MVPT-3 score of 69.46, which falls more than two standard deviations below the mean. Individuals with head injury had a mean score of 80.16, approximately 1.33 standard deviations below the mean. The group with learning disability had an average score of 88.24. The lower MVPT-3 scores for each of these three groups lend support to the construct validity of the test.
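The statements about standard deviations come from converting each group mean to a distance from the population mean in SD units. The sketch assumes MVPT-3 standard scores have a mean of 100 and a standard deviation of 15; the text gives only the mean of 100, but an SD of 15 reproduces its "more than two" and "approximately 1.33" figures. The helper name is hypothetical.

```python
POPULATION_MEAN = 100.0
POPULATION_SD = 15.0  # assumption: the SD is not stated in the text


def sd_below_mean(score: float) -> float:
    """Number of standard deviations a standard score lies below the population mean."""
    return (POPULATION_MEAN - score) / POPULATION_SD


group_means = {
    "developmental delay": 69.46,
    "head injury": 80.16,
    "learning disability": 88.24,
}
for group, mean in group_means.items():
    print(f"{group}: {sd_below_mean(mean):.2f} SD below the mean")
```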

Responsiveness

Not applicable.

References

- Ball, K. K., Roenker, D. L., Wadley, V. G., Edwards, J. D., Roth, D. L., McGwin, G., Raleigh, R., Joyce, J. J., Cissell, G. M., Dube, T. (2006). Can high-risk older drivers be identified through performance-based measures in a department of motor vehicles setting? Journal of the American Geriatrics Society, 54, 77-84.

- Bhavnani, G., Cockburn, J., Whiting, S., Lincoln, N. (1983). The reliability of the Rivermead perceptual assessment. British Journal of Occupational Therapy, 52, 17-19.

- Bouska, M. J., Kwatny, E. (1982). Manual for the application of the motor-free visual perception test to the adult population. Philadelphia (PA): Temple University Rehabilitation Research and Training Center.

- Brown, T. G., Rodger, S., Davis, A. (2003). Motor-Free Visual Perception Test – Revised: An overview and critique. British Journal of Occupational Therapy, 66(4), 159-167.

- Burtner, P. A., Qualls, C., Ortega, S. G., Morris, C. G., Scott, K. (2002). Test-retest of the Motor-Free Visual Perception Test Revised (MVPT-R) in children with and without learning disabilities. Physical and Occupational Therapy in Pediatrics, 22(3-4), 23-36.

- Cate, Y., Richards, L. (2000). Relationship between performance on tests of basic visual functions and visual-perceptual processing in persons after brain injury. Am J Occup Ther, 54(3), 326-334.

- Chalfant, J. C., Scheffelin, M. A. (1969). Task force III. Central processing dysfunctions in children: a review of research. Bethesda, MD: US Department of Health, Education and Welfare.

- Colarusso, R. P., Hammill, D. D. (1972). Motor-free visual perception test. Novato, CA: Academic Therapy Publications.

- Colarusso, R. P., Hammill, D. D. (1996). Motor-free visual perception test–revised. Novato, CA: Academic Therapy Publications.

- Colarusso, R. P., Hammill, D. D. (2003). The Motor Free Visual Perception Test (MVPT-3). Novato, CA: Academic Therapy Publications.

- Durrell, D. (1955). Durrell Analysis of Reading Difficulty. Tarrytown, New York: Harcourt, Brace and World.

- Frostig, M., Lefever, D. W., Whittlesey, J. R. B. (1966). Administration and scoring manual for the Marianne Frostig Developmental Test of Visual Perception. Palo Alto, CA: Consulting Psychologists Press.

- Hammill, D. D., Pearson, N. A., Voress, J. K. (1993). Developmental Test of Visual Perception. 2nd ed. Austin, TX: Pro Ed.

- Hildreth, G. H., Griffiths, N. L., McGauvran, M. E. (1965). Metropolitan Readiness Tests. New York: Harcourt, Brace and World.

- Korner-Bitensky, N. A., Mazer, B. L., Sofer, S., Gelinas, I., Meyer, M. B., Morrison, C., Tritch, L., Roelke, M. A., White, M. (2000). Visual testing for readiness to drive after stroke: A multicenter study. Am J Phys Med Rehabil, 79(3), 253-259.

- Kelly, T. L., Madden, R., Gardner, E. F., Rudman, H. C. (1964). Stanford Achievement Tests. New York: Harcourt, Brace and World.

- Mazer, B. L., Korner-Bitensky, N., Sofer, S. (1998). Predicting ability to drive after stroke. Arch Phys Med Rehabil, 79, 743-750.

- McCane, S. J. (2006). Test review: Motor-Free Visual Perception Test. Journal of Psychoeducational Assessment, 24, 265-272.

- Mercier, L., Hebert, R., Gauthier, L. (1995). Motor free visual perception test: Impact of vertical answer cards position on performance of adults with hemispatial neglect. Occup Ther J Res, 15, 223-226.

- Mercier, L., Hebert, R., Colarusso, R., Hammill, D. (1996). Motor-Free Visual Perception Test – Vertical. Novato, CA: Academic Therapy Publications.

- Pintner, R., Cunningham, B. V. (1965). Pintner-Cunningham Primary Test. New York: Harcourt, Brace and World.

- Sattler, J.M. (2001). Assessment of children: Cognitive applications (4th ed.). San Diego, CA: Jerome M. Sattler.

- Slosson, R. L. (1963). Slosson Intelligence Test. East Aurora, NY: Slosson Educational Publications.

- Su, C-Y., Charm, J-J., Chen, H-M., Su, C-J., Chien, T-H., Huang, M-H. (2000). Perceptual differences between stroke patients with cerebral infarction and intracerebral hemorrhage. Arch Phys Med Rehabil, 81, 706-714.

- Whiting, S., Lincoln, N., Bhavnani, G., Cockburn, J. (1985). The Rivermead perceptual assessment battery. Windsor: NFER-Nelson.

- York, C. D., Cermak, S. A. (1995). Visual perception and praxis in adults after stroke. Am J Occup Ther, 49(6), 543-550.

See the measure

The MVPT can be purchased from: https://www.therapro.com/

Trail Making Test (TMT)

Purpose

The Trail Making Test (TMT) is a widely used test to assess executive function in patients with stroke. Successful performance of the TMT requires a variety of mental abilities including letter and number recognition, mental flexibility, visual scanning, and motor function.

In-Depth Review

Purpose of the measure

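The Trail Making Test (TMT) is a widely used test to assess executive abilities in patients with stroke. Successful performance of the TMT requires a variety of mental abilities including letter and number recognition, mental flexibility, visual scanning, and motor function.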

Performance is evaluated using two different visual conceptual and visuomotor tracking conditions: Part A involves connecting numbers 1-25 in ascending order; and Part B involves connecting numbers and letters in an alternating and ascending fashion.

Available versions

The TMT was originally included as a component of the Army Individual Test Battery and is also a part of the Halstead-Reitan Neuropsychological Test Battery (HNTB).

Features of the measure

Description of tasks:

The TMT consists of two tasks, Part A and Part B:

- Part A: Consists of 25 circles numbered from 1 to 25, randomly distributed over a page of letter-size paper. The participant is required to connect the circles with a pencil as quickly as possible in numerical sequence, beginning with the number 1.

- Part B: Consists of 25 circles numbered 1 to 13 and lettered A to L, randomly distributed over a page of paper. The participant is required to connect the circles with a pencil as quickly as possible, but alternating between numbers and letters and taking both series in ascending sequence (i.e. 1, A, 2, B, 3, C…).

What to consider before beginning:

- The TMT requires relatively intact motor abilities (i.e., the ability to hold and maneuver a pen or pencil and to move the upper extremity). The Oral TMT may be a more appropriate version to use if the examiner considers that the participant’s motor ability may impact his/her performance.

- Cultural and linguistic variables may impact performance and affect scores.

Scoring and Score Interpretation:

Performance is evaluated using two different visual conceptual and visuomotor tracking conditions: Part A involves connecting numbers 1-25 in ascending order; and Part B involves connecting numbers and letters in an alternating and ascending fashion.

Time taken to complete each task and number of errors made during each task are recorded and compared with normative data. Time to complete the task is recorded in seconds, whereby the greater the number of seconds, the greater the impairment.

In some reported methods of administration, the examiner pointed out and explained mistakes during the administration.

A maximum time of 5 minutes is typically allowed for Part B. Participants who are unable to complete Part B within 5 minutes are given a score of 300 or 301 seconds. Performance on Part B has not been found to yield any more information on stroke

| Ranges and Cut-Off Scores | Normal | Brain damage |
|---|---|---|
| TMT Part A | 1-39 seconds | 40 or more seconds |
| TMT Part B | 1-91 seconds | 92 or more seconds |

Adapted from Reitan (1958) as cited in Matarazzo, Wiens, Matarazzo & Goldstein (1974).
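Applying the Reitan cut-offs amounts to comparing each completion time with one threshold per part. A minimal sketch, assuming times are recorded in seconds and that an unfinished Part B is scored as 300 s (some protocols use 301 s); the function and constant names are hypothetical, and a two-way classification like this does not replace comparison with normative data.

```python
# Times at or above these values fall in the "brain damage" range
# (Reitan, 1958, as cited in Matarazzo et al., 1974).
CUTOFF_SECONDS = {"A": 40, "B": 92}
PART_B_TIME_LIMIT = 300  # Part B is usually stopped after 5 minutes


def classify_tmt(part: str, seconds: float) -> str:
    """Return 'normal' or 'brain damage' for a TMT Part A or Part B time."""
    if part not in CUTOFF_SECONDS:
        raise ValueError("part must be 'A' or 'B'")
    if part == "B":
        # Unfinished Part B tests are scored at the time limit.
        seconds = min(seconds, PART_B_TIME_LIMIT)
    return "brain damage" if seconds >= CUTOFF_SECONDS[part] else "normal"


print(classify_tmt("A", 35))   # normal
print(classify_tmt("B", 92))   # brain damage
print(classify_tmt("B", 410))  # brain damage (scored as 300 s)
```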

Time:

Approximately 5 to 10 minutes

Training requirements:

No training requirements have been reported.

Equipment:

- A copy of the measure

- Pencil or pen

- Stopwatch

Alternative versions of the Trail Making Test

- Color Trails (D’Elia et al., 1996)

- Comprehensive Trail Making Test (Reynolds, 2002)

- Delis-Kaplan Executive Function System (D-KEFS) – includes subtests modeled after the TMT

- Oral TMT – an alternative for patients with motor deficits or visual impairments (Ricker & Axelrod, 1994).

- Repeat testing – alternate forms have been developed for repeat testing purposes (Franzen et al., 1996; Lewis & Rennick, 1979)

- Symbol Trail Making Test – developed as an alternative to the standard TMT, which uses Arabic numerals, for populations unfamiliar with the Arabic numeral system (Barncord & Wanlass, 2001)

Client suitability

Can be used with:

- Patients with stroke and brain damage.

Should not be used with:

- Patients with motor deficiencies. If motor ability may impact performance, consider using the Oral TMT.

In what languages is the measure available?

Arabic, Chinese and Hebrew

Summary

| What does the tool measure? | Executive function in patients with stroke |

| What types of clients can the tool be used for? | The TMT can be used with, but is not limited to, patients with stroke. |

| Is this a screening or assessment tool? | Assessment tool |

| Time to administer | The TMT takes approximately 5 to 10 minutes to administer. |

| Versions | |

| Other Languages | Arabic, Chinese and Hebrew |

| Measurement Properties | |

| Reliability | Test-retest: Two studies examined the test-retest reliability of the TMT among patients with stroke. |

| Validity | Content: One study examined the content validity of the TMT and found it to be a complex test that involves aspects of abstraction, visual scanning and attention. Criterion: Construct: Known groups: |

| Floor/Ceiling Effects | One study found Part A of the TMT to have significant ceiling effects. |

| Does the tool detect change in patients? | The responsiveness of the TMT has not been formally studied; however, the TMT has been used to detect changes in a clinical trial with patients with stroke. |

| Acceptability | The TMT is simple and easy to administer. |

| Feasibility | The TMT is relatively inexpensive and highly portable. The TMT is public domain and can be reproduced without permission. It can be administered by individuals with minimal training in cognitive assessment. |

| How to obtain the tool? | The Trail Making Test (TMT) can be purchased from: http://www.reitanlabs.com |

Psychometric Properties

Overview

A literature search was conducted to identify all relevant publications on the psychometric properties of the Trail Making Test (TMT) involving patients with stroke

Floor/Ceiling Effects

In a study by Mazer, Korner-Bitensky and Sofer (1998) that investigated the ability of perceptual testing to predict on-road driving outcomes in patients with stroke, Part A of the TMT was found to have significant ceiling effects. For this reason, Part A was excluded from the study results, as it was deemed too easy for participants when evaluating the ability of the TMT to predict on-road driving test outcomes. No ceiling effects were found for Part B.

Reliability

Internal consistency

No studies were identified examining the internal consistency of the TMT in patients with stroke.

Test-retest:

Matarazzo, Wiens, Matarazzo and Goldstein (1974) examined the test-retest reliability of the TMT and other components of the Halstead Impairment Index in 29 healthy males and 16 60-year-old patients with diffuse cerebrovascular disease. Using Pearson correlation coefficients, adequate test-retest reliability was found for both Part A and Part B of the TMT in the healthy control group (r = 0.46 and 0.44, respectively). Among participants with diffuse cerebrovascular disease, test-retest reliability was excellent for Part A (r = 0.78) and adequate for Part B (r = 0.67).

Goldstein and Watson (1989) investigated the test-retest reliability of the TMT as part of the Halstead-Reitan Battery in a sample of 150 neuropsychiatric patients, including patients with stroke. Coefficients were calculated for the entire sample and for the sub-group of patients with stroke. Excellent test-retest reliability for both Part A and Part B was found in the sub-group of patients with stroke (0.94 and 0.86, respectively), and adequate reliability for the entire participant sample (0.69 and 0.66, respectively).

Intra-rater:

No studies were identified examining the intra-rater reliability of the TMT in patients with stroke.

Inter-rater:

No studies were identified examining the inter-rater reliability of the TMT in patients with stroke.

Validity

Content:

O’Donnell, MacGregor, Dabrowski, Oestreicher & Romero (1994) examined the face validity of the TMT in a sample of 117 community-dwelling patients, including patients with stroke, and found it to be a complex test that involves aspects of abstraction, visual scanning and attention.

Criterion:

Concurrent:

No studies were identified examining the concurrent validity of the TMT.

Predictive:

Mazer, Korner-Bitensky and Sofer (1998) examined the ability of the TMT and other measures of perceptual function to predict on-road driving test outcomes in 84 patients with subacute stroke

Devos et al. (2011) conducted a systematic review to identify the best determinants of fitness to drive following stroke

= 0.81, p<0.0001). In addition, when using a cutoff score of 90 seconds, TMT Part B had a sensitivity of 80% and a specificity of 62% for detecting unsafe on-road performance. In an earlier systematic review by Marshall et al. (2007), the TMT was also found to be one of the most useful predictors of fitness to drive after stroke.
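Sensitivity and specificity at a cut-off come from cross-tabulating the TMT Part B decision against the on-road result: sensitivity is the share of unsafe drivers the cut-off flags, and specificity is the share of safe drivers it clears. A minimal sketch under two assumptions: a Part B time strictly above 90 seconds counts as a positive ("unsafe") screen, and the times and road-test outcomes below are invented for illustration, not data from Devos et al. (2011).

```python
def sensitivity_specificity(times, unsafe, cutoff=90.0):
    """Sensitivity and specificity of 'Part B time > cutoff' for predicting unsafe driving."""
    tp = fn = tn = fp = 0
    for t, is_unsafe in zip(times, unsafe):
        flagged = t > cutoff  # assumption: strictly above the cut-off is a positive screen
        if is_unsafe:
            tp += int(flagged)       # unsafe driver correctly flagged
            fn += int(not flagged)   # unsafe driver missed
        else:
            fp += int(flagged)       # safe driver wrongly flagged
            tn += int(not flagged)   # safe driver correctly cleared
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical Part B times (s) and on-road outcomes (True = unsafe).
times = [150, 120, 95, 80, 70, 100, 60, 85, 110, 75]
unsafe = [True, True, True, True, False, False, False, False, True, False]
sens, spec = sensitivity_specificity(times, unsafe)
print(f"sensitivity={sens:.0%}, specificity={spec:.0%}")
```

Raising the cut-off generally trades sensitivity for specificity, which is why a single pair of values is always tied to the cut-off used.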

Construct:

Convergent/Discriminant:

O’Donnell et al. (1994) examined the convergent validity of the TMT and four other neuropsychological tests: Category Test (CAT), Wisconsin Card Sort Test (WCST), Paced Auditory Serial Addition Task (PASAT), and Visual Search and Attention Test (VSAT). The study involved 117 community-dwelling adults, including patients with stroke

Known groups:

Reitan (1955) examined the ability of the TMT to differentiate between patients with and without organic brain damage, including patients with stroke

Corrigan and Hinkeldey (1987) examined the relationship between Part A and Part B of the TMT. Data were collected from the charts of 497 patients receiving treatment at a rehabilitation centre. Patients with traumatic brain injury and stroke

to differences in cerebral lateralization of damage.

Tamez et al. (2011) examined the effects of frontal versus non-frontal stroke

Sensitivity/ Specificity:

No studies were identified examining the specificity of the TMT in patients with stroke.

Responsiveness

Barker-Collo, Feigin, Lawes, Senior and Parag (2010) assessed the course of recovery of attention span in 43 patients with acute stroke. Although the responsiveness of the TMT was not formally assessed in this study, the test was sensitive enough to detect an improvement in attention at 6 weeks and 6 months following stroke.

References

- Barker-Collo, S., Feigin, V., Lawes, C., Senior, H., & Parag, V. (2010). Natural history of attention deficits and their influence on functional recovery from acute stages to 6 months after stroke. Neuroepidemiology, 35(4), 255-262.

- Barncord, S.W. & Wanlass, R.L. (2001). The Symbol Trail Making Test: Test development and utility as a measure of cognitive impairment. Applied Neuropsychology, 8, 99-103.

- Corrigan, J. D. & Hinkeldey, N. S. (1987). Relationships between Parts A and B of the Trail Making Test. Journal of Clinical Psychology, 43(4), 402-409.

- D’Elia, L.F., Satz, P., Uchiyama, C.I. & White, T. (1996). Color Trails Test. Odessa, FL: PAR.

- Devos, H., Akinwuntan, A. E., Nieuwboer, A., Truijen, S., Tant, M., & De Weerdt, W. (2011). Screening for fitness to drive after stroke: a systematic review and meta-analysis. Neurology, 76(8), 747-756.

- Elkin-Frankston, S., Lebowitz, B.K., Kapust, L.R., Hollis, A.M., & O’Connor, M.G. (2007). The use of the Colour Trails Test in the assessment of driver competence: Preliminary reports of a culture-fair instrument. Archives of Clinical Neuropsychology, 22, 631-635.

- Goldstein, G. & Watson, J.R. (1989). Test-retest reliability of the Halstead-Reitan Battery and the WAIS in a Neuropsychiatric Population. The Clinical Neuropsychologist, 3(3), 265-273.

- O’Donnell, J.P., Macgregor, L.A., Dabrowski, J.J., Oestreicher, J.M., & Romero, J.J. (1994). Construct validity of neuropsychological tests of conceptual and attentional abilities. Journal of Clinical Psychology, 50(4), 596-600.

- Mark, V. W., Woods, A. J., Mennemeier, M., Abbas, S., & Taub, E. Cognitive assessment for CI therapy in the outpatient clinic. Neurorehabilitation, 21(2), 139-146.

- Marshall, S.C., Molnar, F., Man-Son-Hing, M., Blair, R., Brosseau, L., Finestone, H.M., Lamothe, C., Korner-Bitensky, N., & Wilson, K. (2007). Predictors of driving ability following stroke: A systematic review. Topics in Stroke Rehabilitation, 14(1), 98-114.

- Matarazzo, J.D., Wiens, A.N., Matarazzo, R.G., & Goldstein, S.G. (1974). Psychometric and clinical test-retest reliability of the Halstead Impairment Index in a sample of healthy, young, normal men. The Journal of Nervous and Mental Disease, 158(1), 37-49.

- Mazer, B.L., Korner-Bitensky, N.A., & Sofer, S. (1998). Predicting ability to drive after stroke. Archives of Physical Medicine and Rehabilitation, 79, 743-750.

- Mazer, B.L., Sofer, S., Korner-Bitensky, N., Gelinas, I., Hanley, J. & Wood-Dauphinee, S. (2003). Effectiveness of a visual attention retraining program on the driving performance of clients with stroke. Archives of Physical Medicine and Rehabilitation, 84, 541-550.

- Reitan, R.M. (1955). The relation of the Trail Making Test to organic brain damage. Journal of Consulting Psychology, 19(5), 393-394.

- Reynolds, C. (2002). Comprehensive Trail Making Test. Austin, TX: Pro-Ed.

- Ricker, J.H. & Axelrod, B.N. (1994). Analysis of an oral paradigm for the Trail Making Test. Assessment, 1, 47-51.

- Strauss, E., Sherman, E.M.S., & Spreen, O. (2006). A compendium of neuropsychological tests: Administration, norms, and commentary (3rd ed.). New York: Oxford University Press.

- Tamez, E., Myerson, J., Morris, L., White, D. A., Baum, C., & Connor, L. T. (2011). Assessing executive abilities following acute stroke with the trail making test and digit span. Behavioural Neurology, 24(3), 177-185.

See the measure

How to obtain the Trail Making Test (TMT)?

The Trail Making Test (TMT) can be purchased from:

Reitan Neuropsychology Laboratory

P.O. Box 66080

Tucson, AZ 85728